Human-centred Generative AI video production workflows

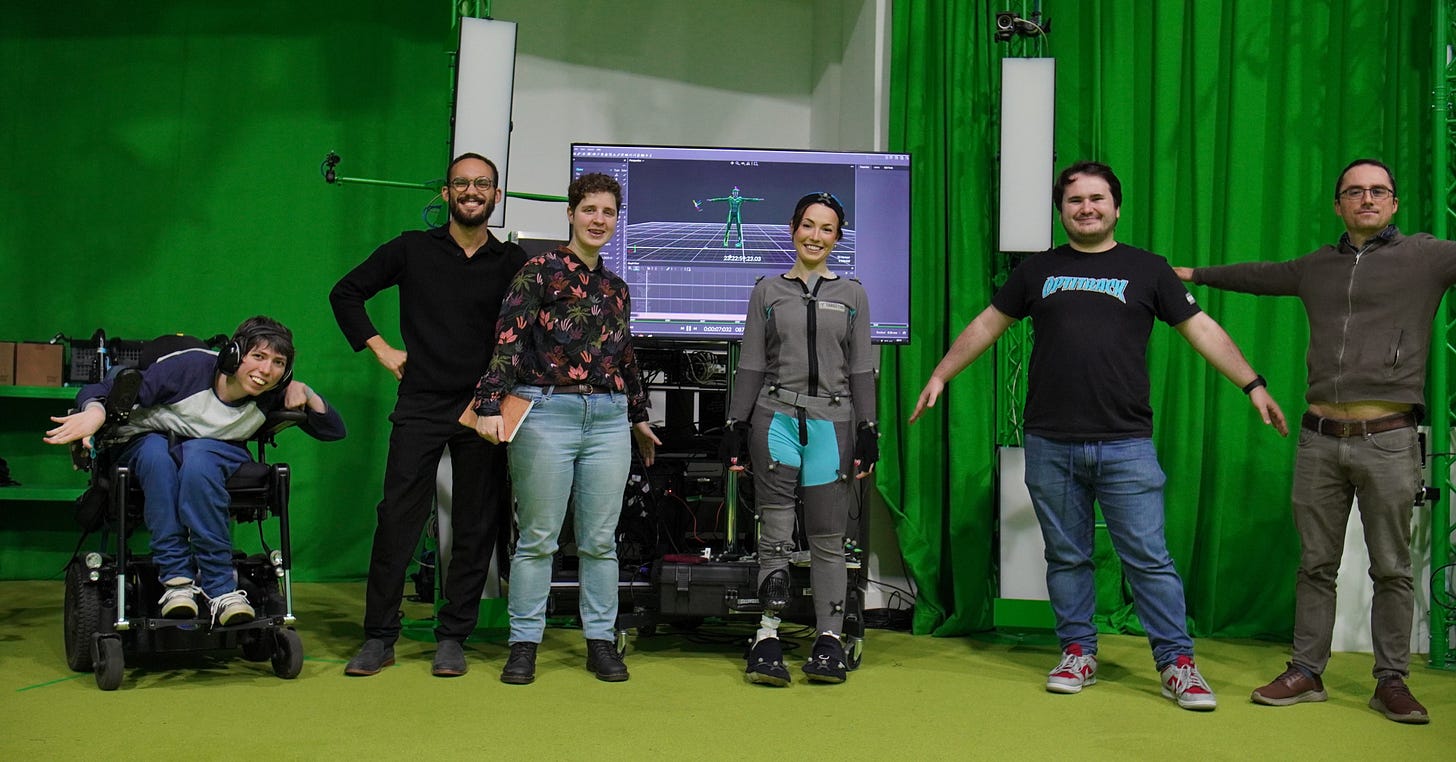

CoSTAR National Lab Prototyping team

In October 2025, the CoSTAR National Lab Prototyping team completed an applied research cycle we dubbed the ‘GAMMA’ project (Gaussian, Avatar, Motion, Mesh, and AI) which combined a range of techniques we have been exploring over the last year, and resulted in a promising demonstration of how far generative AI video can be taken using open source tools.

Our Motivation

Prompted by conversations with creative technology firms working in screen and performance, we set out to explore alternative forms of image and motion capture that would allow us to create a high-fidelity digital likeness of a real person, and to drive this avatar from motion performance captured from another actor. Applications of this could range from creating quick turnaround animated sequences as part of a pitch or previsualisation process, to developing high quality ‘digital stand-ins’ to aid with reshoots.

Whilst this can already be done using existing high-end scanning and motion capture technology, we wanted to investigate if emerging techniques could provide a way to do this in a more lightweight and democratised fashion, whilst preserving the high-quality video output we are looking for.

The research sits against a backdrop of rapidly accelerating interest in generative AI for video and performance production. Over the last year, the emergence of AI-generated performers has captured mainstream attention — for example, UK company Particle6’s AI-created actress Tilly Norwood, whose sudden rise to global fame prompted significant debate around digital talent, representation, and the future of acting.

What did we do?

Building on the success of our work on Gaussian Splatting (showcased at SXSW London earlier this year), we were confident that Gaussian Splat technology could provide the kind of fidelity and quality we were seeking for our ‘digital human’ use case.

We had previously adopted a stop motion approach for Gaussian Splat animations and wanted to explore other methods that might offer greater production efficiencies and flexibility. We tested Gaussian Frosting techniques, which involve extracting a base mesh from the Gaussian point cloud and then attaching the Gaussian Splats back onto the surface of this mesh - much like a layer of icing or frosting on a cake. This would allow us to rig and animate the mesh, with all its attached GSplat beauty, using well-established mesh-based production techniques. We think this approach is promising and we’ll be keeping a close eye on future developments in this space. At this stage, however, the technology did not retain enough GSplat fidelity during the frosting process, and we were also not able to achieve the fine-grained mesh control we were aiming for.

In this project, we therefore looked to generative AI for an alternative approach, with the team developing a bespoke series of tools and workflows, including the use of fine-tuned LoRA (Low-Rank Adaptation) models trained on actors’ likenesses. The resulting digital avatar was then driven by motion extracted from a video of a separate human performer. Another process involved using generative AI to ‘costume dress’ our digital avatar, providing more specific creative control of the output. This was achieved using open-source tools and AI models. All training and model usage was carried out in a secure sandboxed technical environment, ensuring data gathered from performers was protected from being used outside of the project.
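For readers unfamiliar with LoRA, the general idea can be sketched in a few lines. This is a minimal, generic illustration of the low-rank update, not the project's training code: the base weights `W` stay frozen and only two small matrices, `A` and `B`, are trained, giving an effective weight of `W + (alpha / r) * B @ A`. The function names, matrix sizes and `alpha` value below are all assumptions chosen for illustration.

```python
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def lora_effective_weight(w, a, b, alpha):
    """Return W + (alpha / r) * B @ A, where r is the adapter rank."""
    r = len(a)  # A has shape (r, d_in); B has shape (d_out, r)
    scale = alpha / r
    delta = matmul(b, a)
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]

def count_params(d_out, d_in, r):
    """Trainable parameter counts: (full fine-tune, LoRA adapter)."""
    return d_out * d_in, r * (d_in + d_out)

# Illustrative sizes only (not taken from the GAMMA project).
d_out, d_in, r, alpha = 8, 6, 2, 4.0
rng = random.Random(0)
w = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]  # frozen base weights
a = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]   # trainable
b = [[0.0] * r for _ in range(d_out)]                               # trainable, zero-initialised

w_eff = lora_effective_weight(w, a, b, alpha)
full, lora = count_params(d_out, d_in, r)
```

Because `B` starts at zero, the adapted layer initially behaves exactly like the base model, and training only has to learn the small difference needed to capture a specific likeness. The adapter has far fewer trainable parameters than the full weight matrix, and the gap widens as layers get larger.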

The Creative Use Case: “Berti”

The project resulted in the production of a 4-minute HD film, Berti, about the extraordinary rise to fame of a mime artist. The film was designed as a short story featuring a range of film scenes, which aided our efforts to research production challenges and pipelines for different scenarios.

Creative direction and development on the project were led by Senior Creative Technologist Johnny Johnson. The character of “Berti” was produced from a digital likeness of the Prototyping team’s own Producer, Cody Updegrave. A further digital likeness was captured of actor Anna Arthur. Maggie Bain, a specialist motion capture actor, provided the performance data used to animate these digital characters.

Behind the scenes

The film was created using a suite of open-source generative AI tools and models that were orchestrated into a bespoke production pipeline using ComfyUI. You can view a behind-the-scenes explainer video of how the film was created below.

For anyone inspired to dive deeper into our methodology, we have open-sourced the ComfyUI-based AI workflows in our GitHub repository, enabling AI-focused creatives - working at scale or in their bedroom - to develop their own AI film production pipeline.

What did we learn?

Capturing motion data from a performer and using it to drive AI video acting, supported by fine-tuned AI character models, sits right at the cutting edge of AI video developments.

Our image dataset for LoRA training consisted of a range of suitable images of the source actor in different lighting conditions. These LoRA models aided greatly in reproducing an accurate representation of the original actor’s likeness.

The motion extraction techniques provided a consistent representation of the actor’s performance and allowed for specific control of our digital characters.

The production tools, based on several generative AI models working together, are complex and required a great deal of adjustment, fine tuning and repetition to achieve the desired high-resolution output for our short film.

In completing this project, we also explored a number of interesting legal and ethical challenges around how best to contract performers and protect their data such that they retain control of their creative input. For our performers, we used an extension of a motion capture contract with additional clauses that stipulated the security of data within our production environment, tightly defined production usage plans, and post-production data and model deletion plans. We were also mindful of the fact that, currently, copyright laws in the UK do not cover computer-generated content, and it is therefore crucial to keep the ‘human in the loop’ during production such that we, as the creators, can assert ownership of the final output (in this case, the Berti film). This does, however, remain an important and open topic of debate in the bigger picture of AI and copyright.

Most importantly, we believe the work demonstrates the continuing and irreplaceable importance of human performance. At the National Lab, our Responsible AI Principles state that we: “Promote and respect human creativity by undertaking research that enables the creative sector to be more creative, develop and support talent”. We believe this is core to developing a vibrant and successful creative ecosystem. Our motion-capture actor’s skill, nuance, and interpretative choices were central to the production, anchoring AI-driven elements with emotional fidelity and human-centred qualities. We hope to continue to explore how production workflows and tools can be technologically empowered, ethically grounded, and driven by talent.

The Big Picture

Our research focuses specifically on how UK creatives can meaningfully integrate baseline AI services and models into their artistic and commercial workflows while retaining full control over their IP. This sits alongside work we have previously done with Sheridans and DECaDE (the Centre for the Decentralised Economy) on Time to ACCCT: a copyright framework of Access, Control, Consent, Compensation and Transparency, and is something we are actively looking to explore further in future projects.

What’s next?

An upcoming prototype cycle will build on this work by exploring, in greater depth, technical and legal frameworks to support creatives’ control of IP within the AI production landscape. This will connect our GAMMA work with themes at the heart of the ACCCT work.

An additional track of this project, which will be featured in a blog in the coming weeks, explored computer vision-based motion capture techniques for performers with limb differences or cerebral palsy, and for wheelchair users. We will share an update on the data we captured and our plans to use it to develop models with more accurate and diverse digital representations.

Meanwhile, the CoSTAR National Lab is currently calling for companies to join us to explore emergent forms of advanced production that can deliver efficiencies or enhance production workflows in support of impactful audience-facing experiences. Please see here for details about the call and how to apply. The closing date is 15 December 2025.

About the Prototyping Team

The CoSTAR National Lab prototyping team is a small multidisciplinary team of researchers, producers, creative technologists, and developers from across the CoSTAR National Lab’s core partners: NFTS, University of Surrey, Abertay University and Royal Holloway, University of London. Our goal is to connect deep research themes with practical industry use cases. You can find out more about our team here.

Thank you to Branden Faulls, Cody Updegrave, Elliott Hall, Hazel Dixon, Johnny Johnson, Katie Eggleston, Lewis Connelly, Lisa Pearson, Lorna Batey, Miles Bernie, Violeta Menendez Gonzalez and Kirsten McClure.


Impressive work on the GAMMA pipeline. The decision to pivot from Gaussian Frosting when it couldn't retain fidelity is exactly the kind of pragmatic choice that makes applied research actually useful. What's really smart is how you're using LoRA fine-tuning alongside motion extraction to maintain control over the actor's likeness while still getting generative flexibility. That combo is where most production pipelines are going to land once they move beyond the novelty phase.